It has been an incredible week in the world of AI Search. Google announced that over a billion people have used AI Overviews.

I find this amazing, especially as Google were late to the game, launching AI Overviews in 2024 to a limited audience and a full year after Bing integrated with OpenAI.

Today, over 52% of all Google searches have an AI Overview. That’s 52% of all searches where the traditional SERPs haven’t been visible and have been replaced, at least on a cellphone, with the AI-driven response.

Moreover, research has shown that 70% of AI Overviews do not refer to any of the top twelve results in the traditional rankings.

It’s clear that the technology is here to stay.

However, we’re in a transition, and the use of AI is highlighting the challenges of data quality and information accuracy on the Internet.

Take Reddit, for example. Tens of thousands of people have posted and voted on their frustration with AI Overviews.

The challenge isn’t with the AI models that support overviews but rather the quality of the content. However, this doesn’t help if your brand is being represented inappropriately.

The good news is that with the right information, it is possible to gain authority and trustworthiness in the eyes of the AI.

In the meantime, the first step in all our strategies to master GEO must be to stay on top of the content presented in the AI Overview and where it is sourcing the information.

Importance of Strengthening Your Content’s Authority and Trust

Have you recently run a Google search where the AI Overview just didn’t make sense or contradicted itself?

If so, you’re not alone.

It is easy to criticise AI and its models; however, the cause of a poor result often lies elsewhere. Content quality, authority, and trustworthiness are perhaps a better place to start when improving answer quality.

Check out our article on how Large Language Models (LLMs), the technology behind AI tools such as ChatGPT, work.

When I sat down to write this newsletter, I wanted to find an example that demonstrated poor content quality and showed where to start improving responses.

I headed over to Google and used the following search keywords:

is the hard mountain dew gluten free

I’m based in the UK, so your search results may differ from mine.

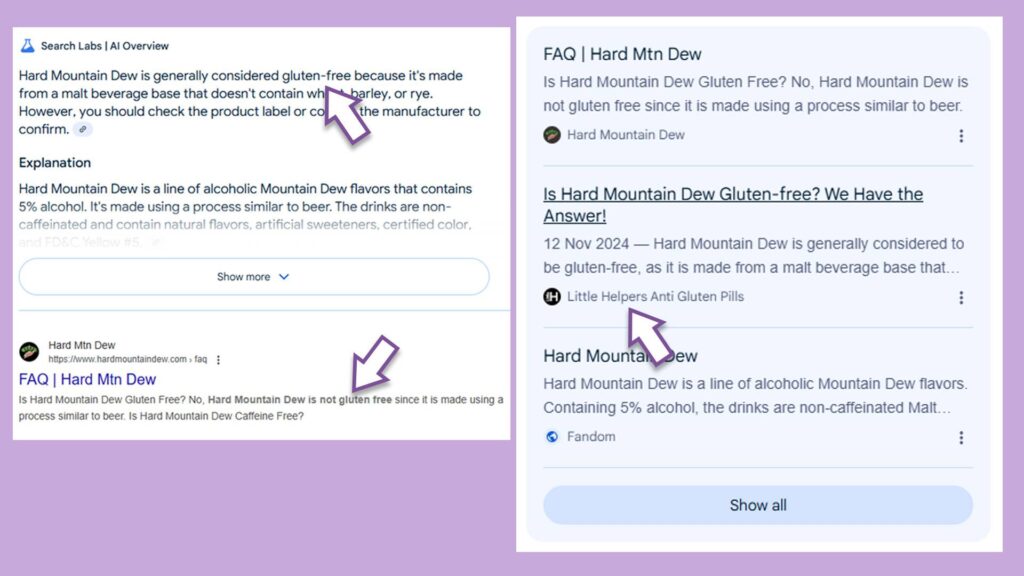

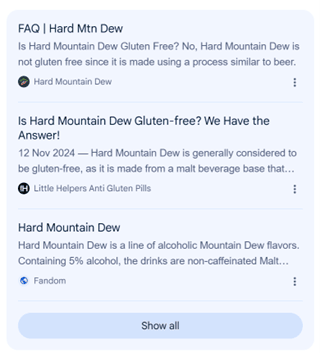

As is common these days, the AI Overview popped up first. However, I want to share the top of the SERPs with you:

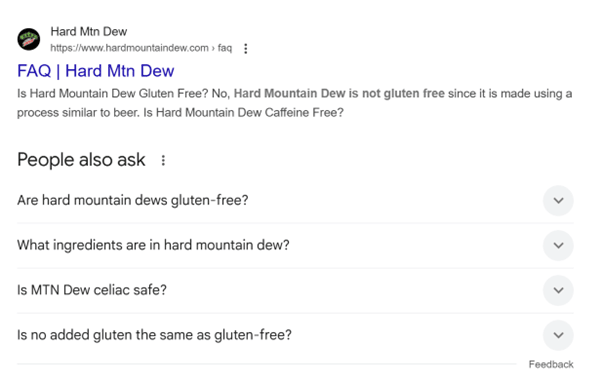

Highlighted in bold, the first result clearly states that the drink is not gluten-free.

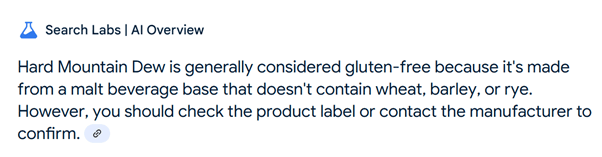

However, when we look at the AI Overview, we’re presented with a very different message.

But why?

The AI model has a vocabulary of words coupled with rules that describe how the words are related. When the context (the input), which includes the body of the web pages found in the SERPs, is passed to the model, the model predicts the most likely next word in the sequence (that is, the answer). It does this repeatedly until the complete response is generated.

It doesn’t know what gluten is, only that it is a word in its vocabulary associated with other words like “free” and “contains”.
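To make the idea of repeated next-word prediction concrete, here is a deliberately tiny sketch. It is not how Google’s model works: real LLMs use neural networks over far larger contexts. The example sentences, the function names `build_word_pairs` and `generate`, and the simple word-pair counting are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


def build_word_pairs(texts):
    """Count, for every word, which words follow it in the input text."""
    counts = defaultdict(Counter)
    for text in texts:
        words = text.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def generate(counts, start, max_words=5):
    """Repeatedly append the most frequent follower of the last word."""
    words = [start]
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known follower, so the response ends here
        next_word, _ = followers.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


# Invented snippets standing in for page text; not the real search results.
pages = [
    "the drink is gluten free",
    "the drink is gluten free",
    "the drink is not gluten free",
]

counts = build_word_pairs(pages)
answer = generate(counts, "drink")
print(answer)
```

In this toy input, “gluten” follows “is” more often than “not” does, so the generated sentence drops the “not”. The sketch has no notion of what gluten is; it only follows word frequencies.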

Of the three web pages processed by the model, one reported that the beverage contained gluten, one contradicted this, and the third made no declaration either way. Collectively, though, the weight of the last two articles was greater than that of the first, and so their message became the prevailing one cited by the AI.
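The “weight” argument can be pictured as a simple tally. The sketch below is only a mental model under stated assumptions: the page names, the equal weights of 1.0, and the function `warning_prevails` are hypothetical, and nothing here reflects how the AI actually scores its sources.

```python
def warning_prevails(pages):
    """Compare the total weight of pages that warn about gluten
    against the total weight of pages that do not."""
    warn = sum(p["weight"] for p in pages if p["says_contains_gluten"])
    no_warn = sum(p["weight"] for p in pages if not p["says_contains_gluten"])
    return warn > no_warn, warn, no_warn


# Hypothetical stand-ins for the three pages described above.
pages = [
    {"name": "page_a", "says_contains_gluten": True, "weight": 1.0},
    {"name": "page_b", "says_contains_gluten": False, "weight": 1.0},  # contradicts page_a
    {"name": "page_c", "says_contains_gluten": False, "weight": 1.0},  # makes no declaration
]

prevails, warn, no_warn = warning_prevails(pages)
print(prevails, warn, no_warn)
```

With these assumed weights, the single page carrying the warning is outweighed two to one, which mirrors the outcome described above. Correcting one of the other pages, or raising the weight of the accurate one, would tip the balance the other way.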

So, what can we do to overcome this?

- We need to expand our SEO strategy to encompass other websites.

- We need to identify which websites the AI deems more important than others.

- We need to correct data quality and content issues and drive a consistent message across all cited sources.

We’re working on this problem. Get the insight and action recommendations we all need to master the narrative presented by AI Overviews.